In the future, the sun is always shining, technology is ubiquitous and invisible, and you never get locked out of Jira. Right? Unfortunately, such visions of the future are unrealistic. Technology that was intended to bring us all together or make our lives easier has also been used to sow division, steal information and even facilitate hatred and violence. If you make the argument that the products that our society has built are working exactly as designed, then we need to be better at designing them!

One of the tools in the UX Research arsenal is the usability study, to see if products or features work as intended. What if we tested how our designs might work as not intended? In this workshop, you will consider how technologies could be used for purposes other than their original intent – a.k.a. an “abuseability study”. Join us to play devil’s advocate in order to build stronger products for a more resilient future.

Blurb to entice signups for an Abuseability studies workshop

In 2019 and 2020 I facilitated or assisted three Abuseability studies workshops:

- IxDA Day in Pittsburgh, September 24, 2019 – assisted Anna Abovyan and Allison Cosby during their Abuseability Studies workshop

- 3M | M*Modal’s internal company conference, Closed Loop, September 26, 2019 – facilitated an Abuseability Studies workshop

- World IA Day in Pittsburgh, February 22, 2020 – co-facilitated an Abuseability Studies workshop with Anna Abovyan

Background

My interest in this topic was inspired in large part by Mike Monteiro, one of my favorite designers, who talks a lot about design ethics and designers as gatekeepers. He published a book called “Ruined by Design: How designers destroyed the world and what we can do to fix it”.

“The world isn’t broken. It’s working exactly as it was designed to work. And we’re the ones who designed it. Which means we fucked up.”

– Mike Monteiro

Monteiro uses the word “designers”, but he explains that he uses this term to mean anyone who makes products that will affect people’s lives.

I did not come up with the idea of Abuseability studies – I was inspired by Carol Smith, a UX researcher in Pittsburgh who first mentioned them to me. Ashkan Soltani, a former Chief Technologist of the Federal Trade Commission, may have come up with the term – or it could have been Dan Brown.

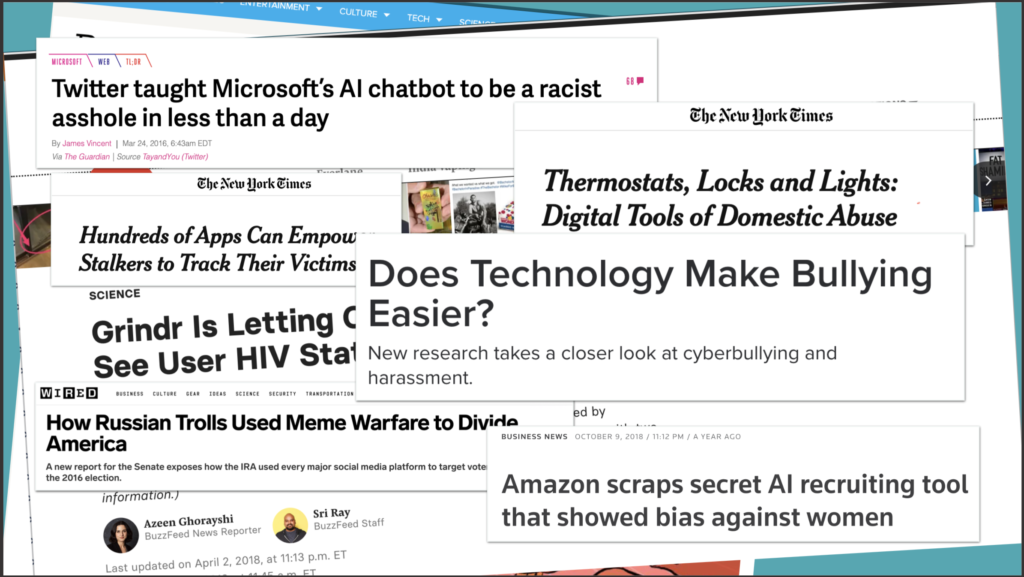

In the tech world today, there are many examples of technology being abused. Some abuse is carried out by outsiders who take advantage of the capabilities of an existing tool and use it to harm others. An example of this is the white supremacist who live-streamed the mass shooting he perpetrated in a mosque in New Zealand.

Other abuse comes from the people who create the technologies and, intentionally or unintentionally, create risk and harm. For example, Grindr, the gay dating app, shared user data with third parties: not only location and phone ID, but also users’ HIV status and when they were last tested. No one thought to leave this information out.

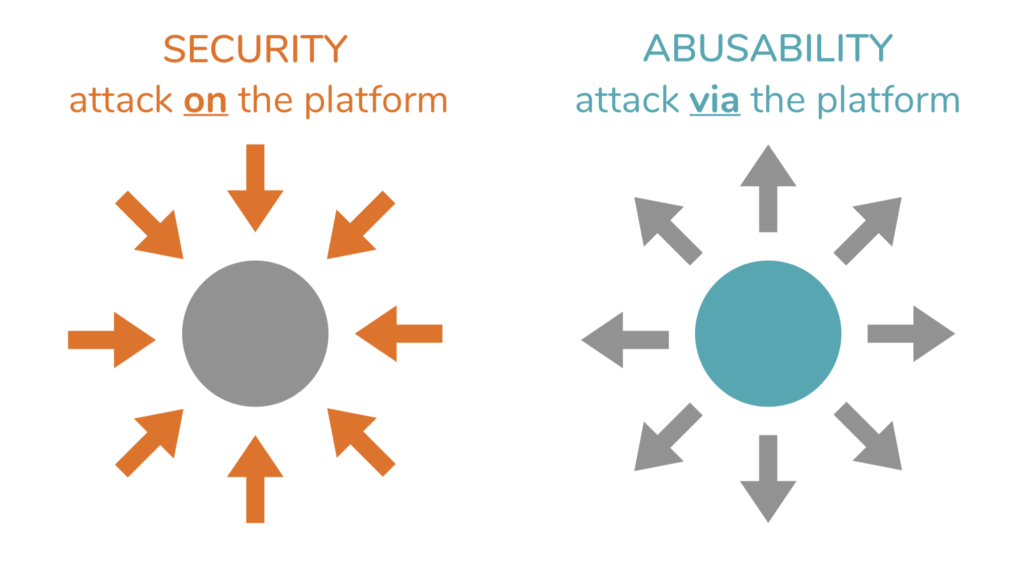

Abuseability is a system’s vulnerability to being attacked via its own platform, whereas security (or lack thereof) is its vulnerability to being attacked directly. There is already a tremendous amount of effort put into security testing, including bug bounties, phishing tests, etc. The level of abuseability of a system can be tested in a similar spirit, via abuseability studies.

Methodology

Over the course of the three workshops, Anna Abovyan and I created a functioning methodology for abuseability studies that is repeatable, accessible to designers and non-designers alike, and also fun!

So how can I run my own abuseability study?

One easy jumping-off point is to think like a writer on Black Mirror, a show that consistently shows us the ill effects of well-intentioned software. We modelled our workshops as an exercise in writing a “pitch” for an episode of Black Mirror.

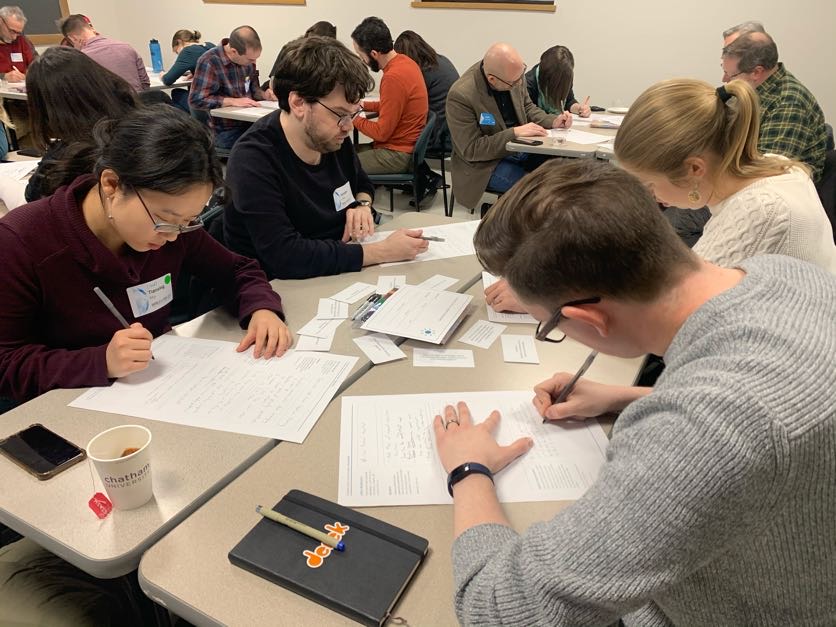

In our World IA Day workshop, we had small groups of 4-5 people who first completed “round robin” worksheets individually before discussing ideas and topics as a group.

The first task is for participants to come up with a technology they know well and list its benefits. When I ran this workshop internally at M*Modal, we asked participants to use the company’s existing and upcoming software and services.

Then, each participant passes their worksheet to the right. The next person thinks about actors and motivations for using that technology, answering questions like “who is affected by this technology?” and “what are their motivations?”

After passing again, the next person thinks about how this technology could be misused: “what’s the worst thing that could happen?” If participants got stuck, we had a stack of prompts that could help, such as “what happens if the electricity goes out?” and “how could governments use this technology?” Finally, once they had considered a bad outcome, participants filled in a “mad libs” style template to help them frame their story:

Because of (technology), (actor) who wanted (motivation), (negative outcome) happened.
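If you want to collect these sentences digitally (for a remote workshop, say), the template is simple enough to fill in with a few lines of code. Here is a minimal Python sketch. The function name `frame_story`, the field names and the example values are hypothetical illustrations and were not part of the paper worksheets.

```python
from string import Template

# The workshop's fill-in-the-blank sentence, with its four blanks as placeholders.
STORY_TEMPLATE = Template(
    "Because of $technology, $actor who wanted $motivation, "
    "$negative_outcome happened."
)


def frame_story(technology, actor, motivation, negative_outcome):
    """Fill the four blanks and return the one-sentence story."""
    return STORY_TEMPLATE.substitute(
        technology=technology,
        actor=actor,
        motivation=motivation,
        negative_outcome=negative_outcome,
    )


if __name__ == "__main__":
    # Hypothetical example values, invented for illustration only.
    print(
        frame_story(
            technology="a shared calendar app",
            actor="a manager",
            motivation="to monitor employees' breaks",
            negative_outcome="constant workplace surveillance",
        )
    )
```

Keeping the sentence in a single template means every group's story comes out in the same shape, which makes the stories easier to compare when the group picks one to storyboard.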

Once these worksheets and templates were filled out, participants came together as a group to discuss all of the finished worksheets. They were then instructed to pick one abuseability scenario and create a 6-panel storyboard illustrating their “episode”.

Once the teams have had ample time to draw and write their storyboards, the entire room comes back together. Each team presents their storyboard and then the room discusses what could be done to prevent this terrible outcome. Facilitators write down solutions for preventing, thwarting or overcoming these abuses, and add post-it notes to relevant panels of the storyboard.

Outcomes

The finished abuseability storyboards plus post-its can be shared with product teams, translated into written form, or potentially even be made into tickets for improvements. The other crucial outcome of abuseability studies is to raise awareness of ethics and responsibility among technologists, and to empower and inspire them to act. Technologists are gatekeepers; we are responsible for what we put out into the world. Abuseability studies are an opportunity for us to take time out from our work to reflect, think critically and do our duty to prevent Black Mirror episodes from happening in real life.

Interested in learning more about abuseability studies, having me lead a workshop at your organization, or running your own? Get in touch with me: tk [at] theorakvitka [dot] com.